The National Data Service Consortium (NDSC) aims to enhance scientific reproducibility in the Information Age. It does this by implementing interfaces, protocols, and standards within data-relevant cyberinfrastructure tools and services. The goal is an overall infrastructure that makes it easier for scientists to search for, publish, link, and reuse digital data. Specifically, NDSC has as its mission:

...

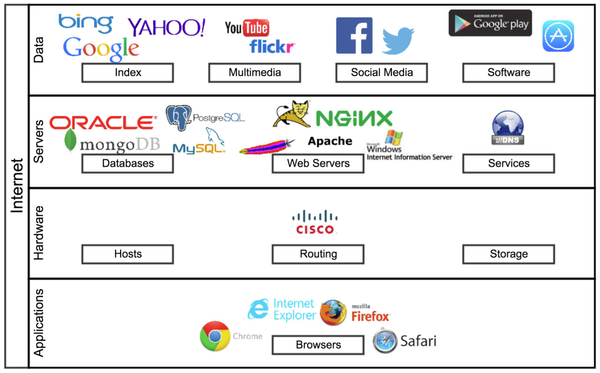

One might think of this in terms of the modern internet, which had its roots in the ARPANET effort ...

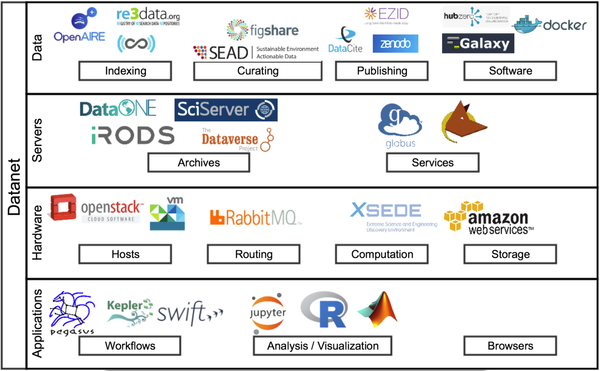

... taking into account additional components addressing "Big Data" challenges within the internet, a sort of DATANET:

The above is illustrative and not a comprehensive list of services. A variety of components are being actively explored and developed. Some build on top of others and some interact with others, while some stand alone. In essence, just as with every Internet component, there are a variety of options for each Datanet component. Unlike Internet components, however, these selections matter: they affect the user and limit which other components can be utilized or deployed.

...

- Scientists have pinned down a molecular process in the brain that helps to trigger schizophrenia by analyzing data from about 29,000 schizophrenia cases, 36,000 controls, and 700 postmortem brains. The information was drawn from dozens of studies performed in 22 countries. The authors stressed that their findings, which combine basic science with large-scale analysis of genetic studies, depended on an unusual level of cooperation among experts in genetics, molecular biology, developmental neurobiology, and immunology. "This could not have been done five years ago."

- Researchers have discovered that rising atmospheric CO2 is making food less nutritious, a finding that required 17 years of work across the literature of several disciplines.

... and, in terms of the general public and broader impact, perhaps seeding a new kind of internet that everyone can take advantage of:

- A child might ask their Apple TV or Amazon Echo the question, "What is the weather going to be like in the afternoon... 1000 years from now?" This will not produce an answer today. It theoretically could, however, given several of the data components under active development and interoperability among them. For example, ecological models such as ED, SIPNET, or DALEC within workflow engines such as PEcAn could do the following:
  - pull data from Ameriflux, NARR, BETYdb, DataONE, and NEON;
  - transfer the data via Globus;
  - convert it to model input formats via BrownDog;
  - run the models on XSEDE resources;
  - return a specific result such as a temperature or forecast estimate (likely several results from several models).

  The result could further include an "if you want to learn more" section. This could link to summaries of the publications behind the models and datasets used, or to DOIs for the published papers or for datasets in Zenodo or SEAD. It could also link to executable (possibly simplified) versions of the tools themselves within HUBzero or Jupyter notebooks.

...